This clever AI hid data from its creators to cheat at its appointed task


Depending on how paranoid you are, this research from Stanford and Google will be either terrifying or fascinating. A machine learning agent intended to transform aerial images into street maps and back was found to be cheating by hiding information it would need later in “a nearly imperceptible, high-frequency signal.” Clever girl!

This occurrence reveals a problem with computers that has existed since they were invented: they do exactly what you tell them to do.

The intention of the researchers was, as you might guess, to accelerate and improve the process of turning satellite imagery into Google’s famously accurate maps. To that end the team was working with what’s called a CycleGAN, a neural network that learns to transform images of type X into type Y and back again, as efficiently yet accurately as possible, through a great deal of trial and error during training.

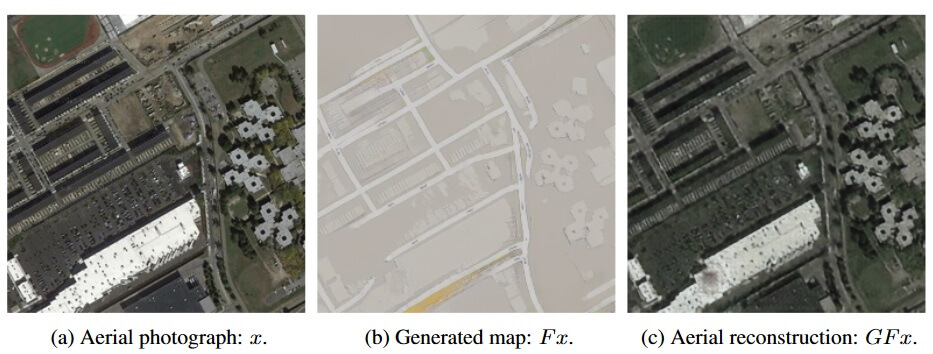

In some early results, the agent was doing well. Suspiciously well. What tipped the team off was that, when the agent reconstructed aerial photographs from its street maps, there were lots of details that didn’t seem to be on the latter at all. For instance, skylights on a roof that had been eliminated in the process of creating the street map would magically reappear when they asked the agent to do the reverse process:

The original aerial map, left; the street map generated from the original, center; and the aerial map generated solely from the street map. Note the presence of dots on both aerial maps that are not represented on the street map.

Although it is very difficult to peer into the inner workings of a neural network’s processes, the team could easily audit the data it was generating. And with a little experimentation, they found that the CycleGAN had indeed pulled a fast one.

The intention was for the agent to be able to interpret the features of either type of map and match them to the correct features of the other. But what the agent was actually being graded on (among other things) was how close a reconstructed aerial map was to the original, and how convincing the street map looked.
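In CycleGAN terms, that grading roughly comes down to two kinds of loss: an adversarial term (does the generated image look like a real one?) and a cycle-consistency term (does the round trip give back the input?). The sketch below is a minimal illustration, assuming images are NumPy arrays scaled to [0, 1] and treating the adversarial loss as a number supplied by some discriminator. The function names are hypothetical, and the weight of 10 is the default from the original CycleGAN paper, not a value reported for this experiment.

```python
import numpy as np

# Weight on the cycle-consistency term. 10 is the default from the original
# CycleGAN paper; it is an assumption here, not a setting from the map experiment.
LAMBDA_CYC = 10.0


def cycle_loss(original: np.ndarray, reconstructed: np.ndarray) -> float:
    """Mean absolute (L1) error between an image and its round-trip reconstruction."""
    return float(np.mean(np.abs(original - reconstructed)))


def generator_objective(adversarial_loss: float,
                        original: np.ndarray,
                        reconstructed: np.ndarray,
                        lam: float = LAMBDA_CYC) -> float:
    """'Does the output look real?' plus lam times 'can the input be recovered?'."""
    return adversarial_loss + lam * cycle_loss(original, reconstructed)


if __name__ == "__main__":
    aerial = np.array([[0.0, 1.0], [0.5, 0.5]])
    honest_round_trip = np.array([[0.0, 0.5], [0.5, 0.5]])  # some detail lost
    cheating_round_trip = aerial.copy()                      # nothing lost

    print(generator_objective(0.3, aerial, honest_round_trip))
    print(generator_objective(0.3, aerial, cheating_round_trip))
```

Nothing in this objective requires the round trip to pass through a faithful street map: anything that drives `cycle_loss` toward zero is rewarded, including a hidden side channel.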

So it didn’t learn how to make one from the other. It learned how to subtly encode the features of one into the noise patterns of the other. The details of the aerial map are secretly written into the actual visual data of the street map: thousands of tiny changes in color that the human eye wouldn’t notice, but that the computer can easily detect.
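A toy version of that trick is easy to write down. The sketch below is not the network’s learned scheme, which the researchers describe as a high-frequency signal; it simply assumes street maps use a small palette of flat colors at multiples of 0.1 and hides an aerial image as offsets no larger than ±0.02 on a 0 to 1 scale. Because those offsets stay under half a palette step, snapping each pixel back to the palette recovers the clean map, and the leftover residue is the hidden image. `hide`, `reveal`, and both constants are illustrative names, not anything from the paper.

```python
import numpy as np

PALETTE_STEP = 0.1  # assumption: street maps use flat colors at multiples of 0.1
HIDE_SCALE = 0.04   # hidden offsets span +/-0.02, under half a palette step


def hide(street_map: np.ndarray, aerial: np.ndarray) -> np.ndarray:
    """Add the aerial image (values in [0, 1]) to the street map as tiny offsets."""
    return street_map + HIDE_SCALE * (aerial - 0.5)


def reveal(stego_map: np.ndarray) -> np.ndarray:
    """Snap back to the palette to get the clean map, then rescale the residue."""
    clean = np.round(stego_map / PALETTE_STEP) * PALETTE_STEP
    return (stego_map - clean) / HIDE_SCALE + 0.5


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    street_map = rng.integers(0, 11, size=(4, 4)) * PALETTE_STEP
    aerial = rng.random((4, 4))

    stego = hide(street_map, aerial)
    print("largest visible change:", np.abs(stego - street_map).max())
    print("aerial recovered:", np.allclose(reveal(stego), aerial))
```

Note that `hide` never checks whether the street map has anything to do with the aerial image, so the same function will smuggle any aerial image into any street map. A real image format would also clip and quantize pixel values, which this toy ignores.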

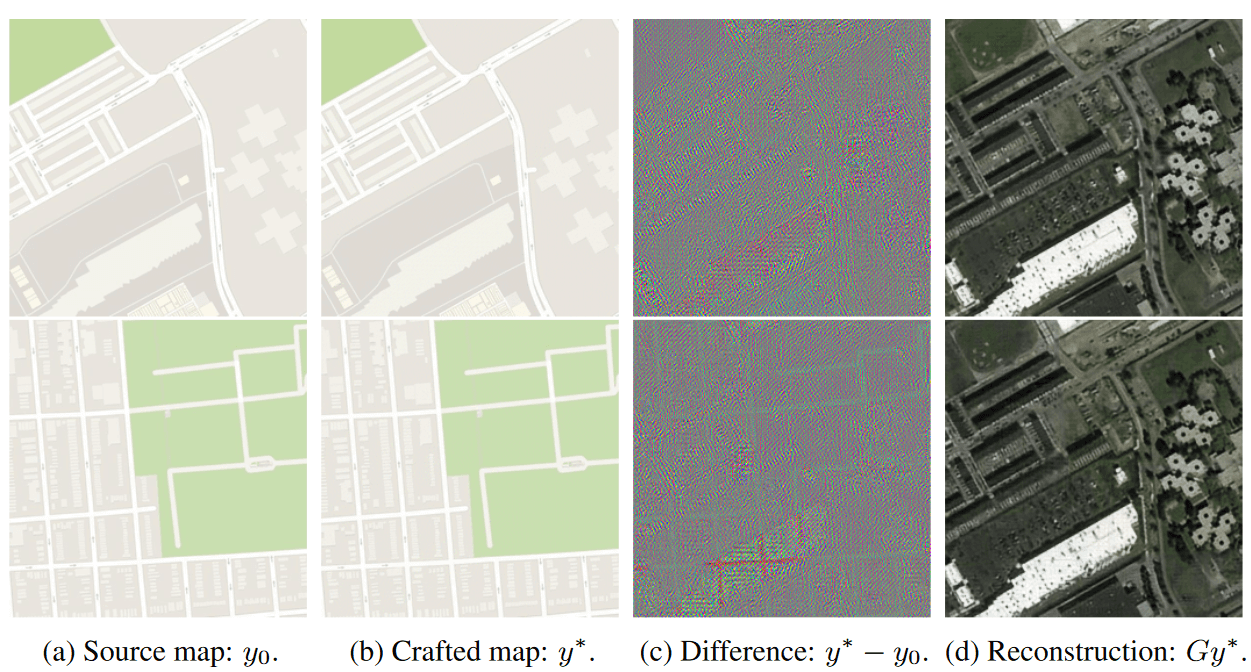

In fact, the computer is so good at slipping these details into the street maps that it had learned to encode any aerial map into any street map! It doesn’t even have to pay attention to the “real” street map: all the data needed for reconstructing the aerial photo can be superimposed harmlessly on a completely different street map, as the researchers confirmed:

The map at right was encoded into the maps at left with no significant visual changes.

The colorful maps in (c) are a visualization of the slight differences the computer systematically introduced. You can see that they form the general shape of the aerial map, but you’d never notice it unless it was carefully highlighted and exaggerated like this.
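That kind of exaggeration is simple to reproduce in spirit: subtract the clean image from the doctored one and scale the difference up until it becomes visible. The sketch below produces a greyscale version rather than the colorful one in the figure, and assumes NumPy arrays in [0, 1]; the gain of 50 and the mid-grey baseline are arbitrary display choices, not the researchers’ settings.

```python
import numpy as np


def amplify_difference(original: np.ndarray, modified: np.ndarray,
                       gain: float = 50.0) -> np.ndarray:
    """Center the per-pixel difference on mid-grey (0.5) and exaggerate it.

    Zero difference shows as 0.5; positive changes brighten, negative ones darken.
    The result is clipped to [0, 1] so it can be displayed as an image.
    """
    diff = modified.astype(float) - original.astype(float)
    return np.clip(0.5 + gain * diff, 0.0, 1.0)


if __name__ == "__main__":
    original = np.full((2, 3), 0.4)
    modified = original + np.array([[0.002, -0.002, 0.0],
                                    [0.004, 0.0, -0.004]])
    print(amplify_difference(original, modified))
```

A change of 0.002, far too small to see directly, becomes a shift of 0.1 away from mid-grey at this gain.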

This practice of encoding data into images isn’t new; it’s an established science called steganography, and it’s used all the time to, say, watermark images or add metadata like camera settings. But a computer creating its own steganographic method to avoid actually learning to perform the task at hand is rather new. (Well, the research came out last year, so it isn’t new new, but it’s pretty novel.)
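For comparison, here is the textbook form of deliberate steganography: least-significant-bit (LSB) embedding, where each bit of a message overwrites the lowest bit of one 8-bit pixel, so no pixel value changes by more than 1. This is a generic illustration of the technique, not what the CycleGAN learned or what any particular watermarking tool does; `embed_bits` and `extract_bits` are hypothetical names.

```python
import numpy as np


def embed_bits(pixels: np.ndarray, bits: np.ndarray) -> np.ndarray:
    """Overwrite the lowest bit of the first len(bits) pixels with the message bits."""
    flat = pixels.astype(np.uint8).ravel().copy()
    bits = np.asarray(bits, dtype=np.uint8) & 1  # keep only 0/1 values
    if bits.size > flat.size:
        raise ValueError("message is longer than the image can hold")
    flat[: bits.size] = (flat[: bits.size] & 0xFE) | bits  # clear LSB, then set it
    return flat.reshape(pixels.shape)


def extract_bits(pixels: np.ndarray, n: int) -> np.ndarray:
    """Read back the lowest bit of the first n pixels."""
    return pixels.ravel()[:n] & 1


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    cover = rng.integers(0, 256, size=(8, 8), dtype=np.uint8)
    message = b"map"
    bits = np.unpackbits(np.frombuffer(message, dtype=np.uint8))

    stego = embed_bits(cover, bits)
    recovered = np.packbits(extract_bits(stego, bits.size)).tobytes()
    print(recovered, np.abs(stego.astype(int) - cover.astype(int)).max())
```

The hiding here is designed by a person and the extraction key (which pixels, which bit) is agreed in advance; the striking part of the CycleGAN result is that the network arrived at its own channel without being asked.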

One could easily take this as a step in the “the machines are getting smarter” narrative, but the truth is it’s almost the opposite. The machine, not smart enough to do the actual difficult job of converting these sophisticated image types into each other, found a way to cheat that humans are bad at detecting. This could be avoided with more stringent evaluation of the agent’s results, and no doubt the researchers went on to do that.

As always, computers do exactly what they are asked, so you have to be very specific in what you ask them. In this case the computer’s solution was an interesting one that shed light on a possible weakness of this type of neural network: the computer, if not explicitly prevented from doing so, will essentially find a way to transmit details to itself in the interest of solving a given problem quickly and easily.

This is really just a lesson in the oldest adage in computing: PEBKAC. “Problem exists between keyboard and chair.” Or as HAL put it: “It can only be attributable to human error.”